The first step in understanding the status of a project, deciding where to start working on it, and prioritizing tasks is to conduct an audit, and for that you need to know how to perform an SEO audit. The larger and more complex the website being analyzed, the more extensive this study will be. The result is an x-ray of the website that serves as a guide for implementing the first changes, always seeking the best ratio of time invested to performance gained. Some actions should be carried out first because of their nature, while the order of others is up to us. In that case, as I mentioned before, the most sensible approach is to start with those that have the most visible effects in the short term.

Let’s begin.

1 – Crawl Analysis

The first thing we need to know is how many URLs on our website are crawlable by search engines and how many are not. From this information, we can assess how well the web architecture and internal linking are optimized and whether the status of each URL matches our needs. This analysis can be divided into several areas of inspection:

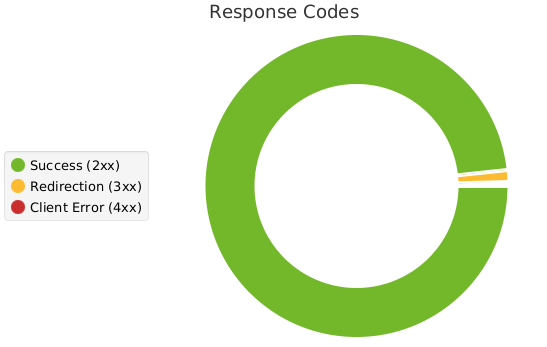

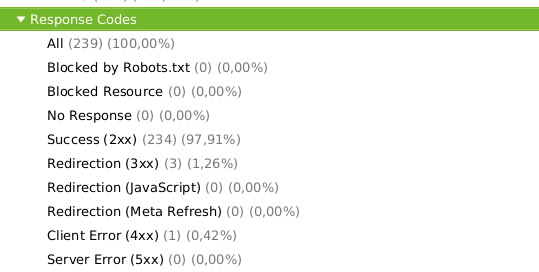

1.1 – Server Response Codes

This involves analyzing the HTTP status code each page returns when it is requested by a user or a crawler.

A fundamental tool for audits (and for SEO in general) is Screaming Frog. With it, we can obtain information on almost any aspect of a website, and we can also download that information, combine it, and work with it as our needs require. A marvel.

Using this tool, we were able to see, as in this case, the number of URLs in the project and which of them present a problem or a notable characteristic.

This already gives us information to work with.
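Once the crawl data is exported, a short script can group URLs by status class and list the ones that need attention. The sketch below is a minimal example under stated assumptions: the sample rows are hypothetical, and the `Address` and `Status Code` column names are an assumption about the export format, so check the headers of your own file.

```python
import csv
import io
from collections import Counter

# Hypothetical excerpt of a crawl export. The "Address" and "Status Code"
# column names are an assumption: check the headers of your own export.
SAMPLE_EXPORT = """Address,Status Code
https://www.example.com/,200
https://www.example.com/old-page,301
https://www.example.com/missing,404
https://www.example.com/blog/,200
https://www.example.com/api/report,500
https://www.example.com/blocked,0
"""


def status_class(code: int) -> str:
    """Group a status code into its class, e.g. 301 -> '3xx'."""
    if code < 100:
        # Some crawlers report 0 when no HTTP response was received
        # (timeout, DNS error, URL blocked by robots.txt).
        return "no response"
    return f"{code // 100}xx"


def summarize_crawl(export_text: str) -> tuple[Counter, list[tuple[str, int]]]:
    """Count URLs per status class and list every URL that is not a plain 200."""
    reader = csv.DictReader(io.StringIO(export_text))
    rows = [(row["Address"], int(row["Status Code"])) for row in reader]
    counts = Counter(status_class(code) for _, code in rows)
    to_review = [(url, code) for url, code in rows if code != 200]
    return counts, to_review


if __name__ == "__main__":
    counts, to_review = summarize_crawl(SAMPLE_EXPORT)
    print(dict(counts))
    for url, code in to_review:
        print(code, url)
```

Redirects (3xx), client errors (4xx), and server errors (5xx) each call for a different action, which is why the summary keeps the classes separate.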

1.2 – File Types in the Project

It’s important to know the different file types in the project, as each one requires different work and optimization: HTML pages are not handled the same way as CSS or JS files, and images require their own kind of optimization.

1.3 – Robots.txt Configuration

This section is very important for crawling, as the robots.txt file is the first configuration element crawlers encounter when they access our website. Through this file, we give crawlers information such as:

Which crawlers (user agents) we allow to access our website.

The specific pages, or sets of pages, whose crawling we will block.

This is very important, among other things, for optimizing the Crawl Budget: the resources (time) a search engine devotes to crawling our website. By telling crawlers, through the Robots.txt file, which URLs they can access, we can prioritize those we have worked on the most or those we are most interested in ranking. Likewise, we can block those where we don’t want crawlers to waste their valuable time.

The location of the Sitemap file, which makes it easier for crawlers to find the URLs we want them to crawl.

Correct configuration of this file is key, as its result must be aligned with the state of our website, its architecture and internal linking, our business proposal, and the content.
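To check how a set of URLs is affected by the rules, a quick sketch using Python’s standard `urllib.robotparser` module can help. The robots.txt content and the URLs below are hypothetical. As far as we know, this parser applies rules in file order and does not support Google’s `*` and `$` path wildcards, so treat its answers as a first approximation and confirm doubtful cases in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site; replace with your own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

User-agent: BadBot
Disallow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content already in memory (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawlability_report(parser: RobotFileParser, urls: list[str],
                        user_agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True if `user_agent` may crawl it under the rules."""
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    urls = [
        "https://www.example.com/products/shoe-1",
        "https://www.example.com/cart/checkout",
        "https://www.example.com/search?q=shoes",
    ]
    for url, allowed in crawlability_report(rules, urls).items():
        print("ALLOWED" if allowed else "BLOCKED", url)
    # Sitemap URLs declared in the file (None if there are none).
    print("Sitemaps declared:", rules.site_maps())
```

Running the same URL list against several user agents quickly shows whether a block meant for one bot is accidentally affecting others.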

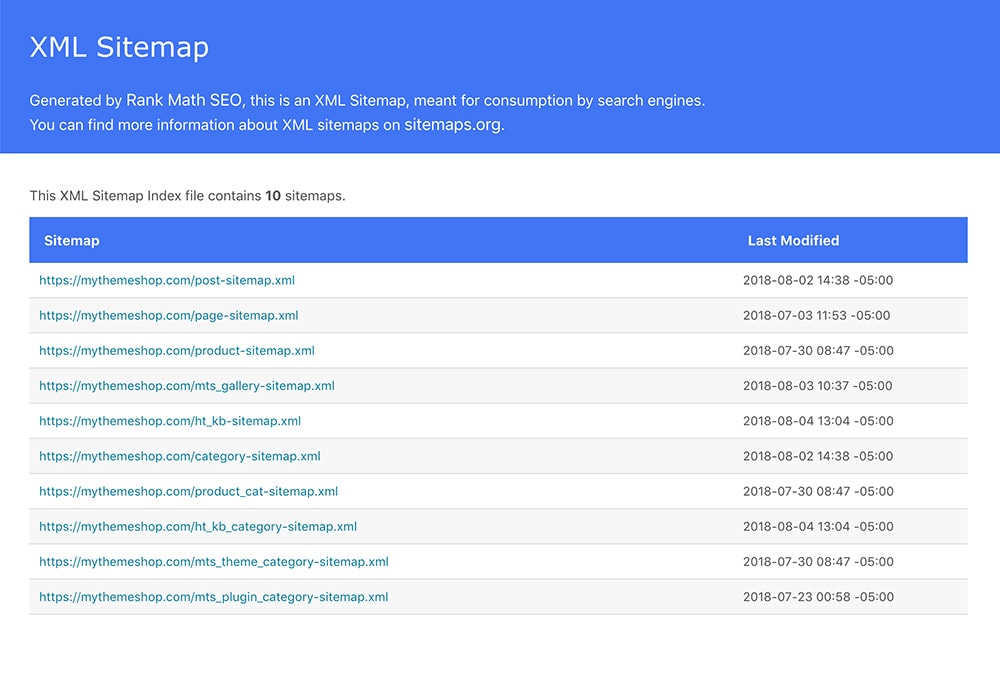

1.4 – URLs included in the Sitemap.xml

The Sitemap.xml, as its name suggests, is a map of the website that we present to both search engines and any user who wishes to consult it.

This map does not necessarily correspond to the actual site map, but rather will be an abstraction of it, presenting the website as we want the spiders to understand and crawl it.

Unlike Robots.txt, this file does not mark blocked areas; instead, it offers help and suggestions about which elements to crawl.

In our audit, we must verify the existence of this file, how it is generated, and whether the URLs it contains match our objectives.

There are numerous tools that create these maps automatically, such as the WordPress plugins Yoast SEO and Rank Math: we set some guidelines, and the file is generated automatically. However, it’s never a good idea to leave it on automatic and forget about it; we must make sure the generated file is correct.
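One way to verify the generated file is to compare its URLs with the crawl results. The sketch below, under stated assumptions, reads a sitemap with Python’s `xml.etree.ElementTree` using the sitemaps.org namespace, then flags listed URLs that did not return 200 and 200 URLs missing from the sitemap. The sitemap and crawl data are hypothetical, sitemap index files are not handled, and a real check would also exclude noindexed or canonicalized URLs.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SM_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap; in practice, download it from the URL in robots.txt.
SAMPLE_SITEMAP = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/products/shoe-1</loc></url>
  <url><loc>https://www.example.com/old-page</loc></url>
</urlset>
"""

# Hypothetical crawl results: URL -> HTTP status code.
CRAWL_STATUS = {
    "https://www.example.com/": 200,
    "https://www.example.com/products/shoe-1": 200,
    "https://www.example.com/old-page": 301,
    "https://www.example.com/blog/": 200,
}


def sitemap_urls(xml_bytes: bytes) -> list[str]:
    """Return the <loc> values of a <urlset> sitemap, in document order."""
    root = ET.fromstring(xml_bytes)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SM_NS) if loc.text]


def compare_with_crawl(listed: list[str], crawl_status: dict[str, int]):
    """Return (listed URLs that are not 200, 200 URLs absent from the sitemap)."""
    listed_set = set(listed)
    not_ok = sorted(url for url in listed_set if crawl_status.get(url) != 200)
    missing = sorted(url for url, status in crawl_status.items()
                     if status == 200 and url not in listed_set)
    return not_ok, missing


if __name__ == "__main__":
    not_ok, missing = compare_with_crawl(sitemap_urls(SAMPLE_SITEMAP), CRAWL_STATUS)
    print("In sitemap but not 200:", not_ok)
    print("200 but not in sitemap:", missing)
```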

With the previous steps, we have an idea of the website’s overall crawlability. However, we also need to know which crawlable pages are actually being crawled. This can be done by analyzing server logs, which tell us which URLs are being visited, and by whom. A very useful tool for this is Screaming Frog Log Analyzer.
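For a first look at server logs without a dedicated tool, a small script can count which URLs a given bot requests and which status codes it receives. This is a minimal sketch that assumes the Apache/Nginx "combined" log format; the log lines are hypothetical and use documentation IP ranges. The user-agent string can be spoofed, so a serious analysis should also verify Googlebot (for example, with the reverse DNS check Google documents), which this sketch does not do.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption; adjust to your server).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical access log excerpt.
SAMPLE_LOG = f"""\
203.0.113.5 - - [10/Mar/2024:10:00:00 +0000] "GET /products/shoe-1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"
203.0.113.5 - - [10/Mar/2024:10:05:00 +0000] "GET /products/shoe-1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"
203.0.113.5 - - [10/Mar/2024:10:07:00 +0000] "GET /old-page HTTP/1.1" 301 0 "-" "{GOOGLEBOT_UA}"
198.51.100.7 - - [10/Mar/2024:10:08:00 +0000] "GET /blog/ HTTP/1.1" 200 8000 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
"""


def bot_hits(log_text: str, bot_token: str = "Googlebot") -> tuple[Counter, Counter]:
    """Count requests per path and per status code for lines whose UA contains bot_token."""
    hits: Counter = Counter()
    statuses: Counter = Counter()
    for line in log_text.splitlines():
        match = LOG_PATTERN.match(line)
        if not match or bot_token not in match["agent"]:
            continue  # unparseable line or a different user agent
        hits[match["path"]] += 1
        statuses[match["status"]] += 1
    return hits, statuses


if __name__ == "__main__":
    hits, statuses = bot_hits(SAMPLE_LOG)
    print("Most crawled:", hits.most_common(10))
    print("Status codes served to the bot:", dict(statuses))
```

Comparing these counts with the list of crawlable URLs shows which important pages the bot rarely visits.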

On the other hand, we must detect orphan URLs (pages that exist but receive no internal links) and URLs that we don’t fully control.
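An orphan URL is one that we know exists (because it appears in the sitemap, the logs, or analytics) but that no internal link points to. A minimal sketch, assuming a hypothetical list of known URLs and a hypothetical internal link graph exported from a crawl, is a simple set difference:

```python
def find_orphans(known_urls: list[str], link_graph: dict[str, list[str]],
                 home: str) -> list[str]:
    """Return known URLs that no crawled page links to (the home page is exempt)."""
    linked = {home}
    for targets in link_graph.values():
        linked.update(targets)
    return sorted(set(known_urls) - linked)


# Hypothetical inputs: URLs gathered from sitemap, logs and analytics,
# and the internal link graph from a crawl (page -> pages it links to).
KNOWN_URLS = ["/", "/shoes", "/landing-2019", "/blog/"]
LINK_GRAPH = {
    "/": ["/shoes", "/blog/"],
    "/shoes": ["/"],
}

if __name__ == "__main__":
    print(find_orphans(KNOWN_URLS, LINK_GRAPH, "/"))
```

This simplified version counts any internal link, even one coming from another orphan page; a stricter check would only count pages reachable from the home page.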

2 – Indexing Analysis

Once we know the crawling status of the website, we need to know which pages are indexed by search engines and what indications we are giving about the indexing of those pages.

In this regard, we must apply the same logic as in the previous section, distinguishing which pages are indexable from which pages are actually indexed.

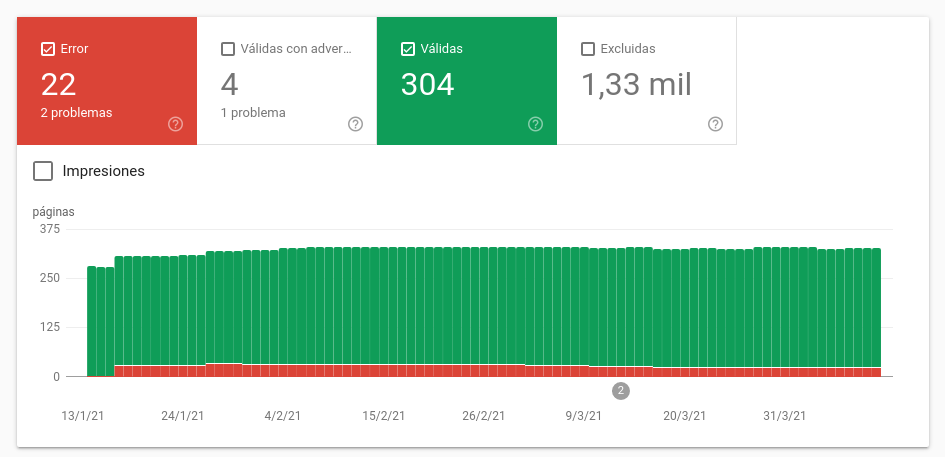

The best way to know the number of URLs indexed by Google is through the Google Search Console tool.

The graph above shows the number of indexed (valid) URLs, those not indexed (excluded), and those containing errors.

To define the indexing of a website’s URLs, there are two types of indications we should take into account and analyze:

2.1 – Indexing Directives

They have the force of law: search engines are expected to obey them. There are numerous types, but the most common indicate whether a page should be indexed or not (index/noindex) and whether the links on a page should be followed or not (follow/nofollow). In the audit, we should check whether these indexing directives are aligned with our interests.
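Directives can arrive in the HTML (`<meta name="robots">`) or in the `X-Robots-Tag` HTTP header, so an audit should look at both. The sketch below, a minimal example with hypothetical inputs, uses Python’s standard `html.parser` to collect them and reports whether the page is indexable and whether its links should be followed. For simplicity it ignores user-agent prefixes inside the header value.

```python
from html.parser import HTMLParser
from typing import Optional


def split_directives(value: str) -> set[str]:
    """Turn 'noindex, Follow' into {'noindex', 'follow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            self.directives.update(split_directives(attr.get("content", "")))


def indexing_directives(html: str, x_robots_tag: Optional[str] = None) -> dict:
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    if x_robots_tag:
        # Simplification: ignores prefixes such as "googlebot: noindex".
        directives |= split_directives(x_robots_tag)
    blocked = "none" in directives  # "none" is equivalent to noindex, nofollow
    return {
        "indexable": not blocked and "noindex" not in directives,
        "follow_links": not blocked and "nofollow" not in directives,
        "directives": sorted(directives),
    }


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(indexing_directives(page))
```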

2.2 – Indexing Suggestions

They are not binding; Google can do whatever it deems appropriate with them. The most common suggestion is the canonical tag, which tells search engines that another URL is the reference (canonical) version of the page and should be given priority over the page itself. It is commonly used when there are pages with similar or duplicate content.
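During the audit, it helps to classify each page’s canonical as missing, self-referencing, pointing elsewhere, or conflicting (several different canonicals). The sketch below is a minimal example with hypothetical pages; it resolves relative `href` values with `urllib.parse.urljoin` and compares URLs as exact strings, so differences such as a trailing slash or http versus https count as "pointing elsewhere".

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects href values of <link rel="canonical"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split() and attr.get("href"):
            self.hrefs.append(attr["href"].strip())


def check_canonical(page_url: str, html: str) -> tuple[str, list[str]]:
    """Return (status, resolved canonical URLs) for one page."""
    parser = CanonicalParser()
    parser.feed(html)
    targets = [urljoin(page_url, href) for href in parser.hrefs]
    if not targets:
        return "missing", targets
    if len(set(targets)) > 1:
        return "conflicting", targets
    if targets[0] == page_url:
        return "self", targets[:1]
    return "points_elsewhere", targets[:1]


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="/shoes"></head>'
    print(check_canonical("https://www.example.com/shoes?color=red", html))
```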

3 – Content Analysis

At this point, we should conduct a content analysis of our website. We will primarily focus on the following:

Similar content

Duplicate content

Thin content

Presence of structured data to organize content
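The first three checks can be approximated with simple text comparisons. The sketch below assumes we already have each page’s main text (hypothetical here): exact duplicates are found by hashing normalized text, near duplicates by the Jaccard similarity of 3-word shingles, and thin content by a word-count floor. The 0.8 similarity and 300-word defaults are hypothetical thresholds to tune for each site.

```python
import hashlib
import re
from itertools import combinations


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def shingles(text: str, k: int = 3) -> set[str]:
    """Set of overlapping k-word sequences ("shingles") in the text."""
    words = normalize(text).split()
    return {" ".join(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by the size of the union."""
    return len(a & b) / len(a | b) if a | b else 1.0


def content_report(pages: dict[str, str], near_threshold: float = 0.8,
                   min_words: int = 300) -> dict:
    digests = {url: hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
               for url, text in pages.items()}
    first_seen: dict[str, str] = {}
    exact = []
    for url, digest in digests.items():
        if digest in first_seen:
            exact.append((first_seen[digest], url))
        else:
            first_seen[digest] = url
    page_shingles = {url: shingles(text) for url, text in pages.items()}
    near = []
    for a, b in combinations(pages, 2):
        if digests[a] == digests[b]:
            continue  # already reported as an exact duplicate
        score = jaccard(page_shingles[a], page_shingles[b])
        if score >= near_threshold:
            near.append((a, b, round(score, 2)))
    thin = [url for url, text in pages.items() if len(normalize(text).split()) < min_words]
    return {"exact": exact, "near": near, "thin": thin}


# Hypothetical page texts.
PAGES = {
    "/a": "Red running shoes for men with breathable mesh and cushioned sole",
    "/b": "  RED running   shoes for men with breathable mesh and cushioned sole ",
    "/c": "Red running shoes for men with breathable mesh and a cushioned sole",
    "/d": "Contact us by phone",
}

if __name__ == "__main__":
    print(content_report(PAGES, near_threshold=0.5, min_words=5))
```

Comparing every pair grows quadratically with the number of pages, so large sites usually need techniques such as MinHash to keep this practical.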

Depending on the results of this analysis and our interests, we can implement the measures we deem appropriate:

Merging pages with similar or duplicate content

Adding canonical tags to less relevant pages, pointing to the more important ones

Redirects

Implementation or revision of Schema.org markup for information that can be presented as structured data

Development of a content strategy for corporate pages, blogs, categories, products, etc.
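As an illustration of structured data, the sketch below generates JSON-LD markup for a product page. The product data is hypothetical; `Product`, `Offer`, `name`, `description`, `sku`, `offers`, `price`, `priceCurrency`, and `availability` are standard Schema.org types and properties, but which ones a given result type needs should be checked against the search engine’s documentation, and the output validated with a tool such as Google’s Rich Results Test.

```python
import json


def product_jsonld(name: str, description: str, sku: str, price: float,
                   currency: str, in_stock: bool) -> str:
    """Return a <script> tag containing Schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # Hypothetical product.
    print(product_jsonld("Red running shoe", "Breathable mesh running shoe",
                         "SHOE-1", 59.9, "EUR", True))
```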

4 – On-Page Element Analysis

As a complement to the content analysis, we need to check each URL’s SEO-sensitive elements. Specifically, it’s important to review:

Page titles (the title tag).

Descriptions (the meta description tag).

The correct hierarchy of H1, H2, etc. headings.

The organization of information in lists.

Highlighted text fragments, such as bold and italics.

The correct SEO configuration of images, including their titles and alt text.

All these checks should be performed to ensure that On-Page SEO is correctly implemented and aligned with the rankings we are targeting, based on studies such as keyword research and industry and competitor analysis.
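Several of these checks can be automated per URL. The sketch below, a minimal example using Python’s standard `html.parser` on a hypothetical page, flags a missing or duplicated title, an overlong title, a missing meta description, a count of H1 headings other than one, jumps in the heading hierarchy (such as H1 straight to H3), and images without alt text. The 60-character title limit and the single-H1 rule are common audit conventions, not search-engine requirements.

```python
from html.parser import HTMLParser


class OnPageParser(HTMLParser):
    """Collects title, meta description, heading levels and images without alt."""

    def __init__(self) -> None:
        super().__init__()
        self.titles: list[str] = []
        self.descriptions: list[str] = []
        self.headings: list[int] = []  # heading levels in document order
        self.images_without_alt = 0
        self._in_title = False
        self._title_parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "title":
            self._in_title = True
            self._title_parts = []
        elif tag == "meta" and attr.get("name", "").lower() == "description":
            self.descriptions.append(attr.get("content", "").strip())
        elif tag in {"h1", "h2", "h3", "h4", "h5", "h6"}:
            self.headings.append(int(tag[1]))
        elif tag == "img" and not attr.get("alt", "").strip():
            # Note: alt="" is legitimate for purely decorative images.
            self.images_without_alt += 1

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self.titles.append("".join(self._title_parts).strip())
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self._title_parts.append(data)


def audit_on_page(html: str, max_title_len: int = 60) -> list[str]:
    parser = OnPageParser()
    parser.feed(html)
    parser.close()
    issues = []
    if len(parser.titles) != 1:
        issues.append(f"expected 1 title, found {len(parser.titles)}")
    elif len(parser.titles[0]) > max_title_len:
        issues.append(f"title longer than {max_title_len} characters")
    if len(parser.descriptions) != 1:
        issues.append(f"expected 1 meta description, found {len(parser.descriptions)}")
    h1_count = parser.headings.count(1)
    if h1_count != 1:
        issues.append(f"expected 1 h1, found {h1_count}")
    for prev, cur in zip(parser.headings, parser.headings[1:]):
        if cur > prev + 1:
            issues.append(f"heading level jumps from h{prev} to h{cur}")
    if parser.images_without_alt:
        issues.append(f"{parser.images_without_alt} image(s) without alt text")
    return issues


if __name__ == "__main__":
    page = ("<html><head><title>Red running shoes</title></head><body>"
            "<h1>Shoes</h1><h3>Sizes</h3><img src='a.jpg'></body></html>")
    for issue in audit_on_page(page):
        print(issue)
```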

5 – Link Analysis and Relevance

As we’ve discussed in other articles, a page’s importance (PageRank) is a key ranking factor. We’ve also mentioned that PageRank can be passed from one URL to another through links. These links can be classified according to several criteria, one of which is whether they point to a URL on the same domain or on another domain; in other words, whether they are internal or external links. This distinction is very important, as it determines which aspects to consider and which actions to take.

5.1 – Internal Linking

Internal linking analysis will help us understand how importance is distributed across the different parts of the website, and the actions taken to achieve this distribution.

There is a term for this task of channeling PageRank toward the areas we consider a priority: PageRank sculpting. There are different ways to achieve this distribution.

NoFollow links: we should study the existing nofollow links on the website to determine which types of elements are not receiving all the possible authority.

Link obfuscation: this technique converts any link into plain text in Google’s eyes. Its existence also gives us an indication of the intentions behind giving importance to certain URLs on the website.

URL depth: we should study the depth (number of clicks from the home page) of the website’s pages. As we know, because of the damping factor, a percentage of PageRank is lost with each jump between depth levels.

Menus and other types of links: we must study and assess general menus, category menus, breadcrumbs, footer menus, etc., to determine whether the PageRank distribution is being tailored to our interests.

On the other hand, we must study the information architecture to determine whether internal linking is truly fulfilling its function in efficiently executing this architecture.
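To put numbers on depth and distribution, the sketch below computes click depth with a breadth-first search from the home page and runs the classic iterative PageRank formula, with a damping factor of 0.85, over a hypothetical internal link graph. Google’s current ranking calculations are not public, so this is only a way to compare internal linking alternatives, not a reproduction of what Google computes.

```python
from collections import deque


def click_depth(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Minimum number of clicks from `home` to each reachable page (BFS)."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic iterative PageRank; scores sum to 1 across all pages."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets the "random jump" share (1 - damping) / n.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = set(links.get(page, []))
            if targets:
                # Only `damping` of the page's score flows through its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outlinks): spread its score evenly.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/shoes", "/blog"],
    "/shoes": ["/shoes/red", "/"],
    "/blog": ["/"],
    "/shoes/red": ["/shoes"],
}

if __name__ == "__main__":
    print(click_depth(LINKS, "/"))
    for page, score in sorted(pagerank(LINKS).items(), key=lambda kv: -kv[1]):
        print(f"{score:.3f}  {page}")
```

Re-running the calculation after adding or removing a menu link gives a rough idea of how that change shifts importance between sections.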

5.2 – External Links

A thorough analysis of this section is essential, as external links are one of the pillars of SEO, being the element that speaks to our popularity and importance.

In the audit, we must use tools to understand the website’s link profile. This link profile will consist of all the links pointing to the website’s different URLs. Specifically, to understand this link profile, we must pay attention to data such as:

Number of inbound links

Source page of the link

Quality of the source page

Anchor text of the link.

PageRank of the source page

Follow/nofollow ratio

Speed of link acquisition
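Some of these figures can be computed directly from a backlink export. The sketch below assumes hypothetical backlink records with source URL, target URL, anchor text, a nofollow flag, and the date the link was first seen; it reports the number of links, referring hosts, the follow ratio, the most frequent anchors, and new links per month. Source-page quality and PageRank are not computed here, since they depend on third-party metrics. Grouping by hostname rather than registrable domain is a simplification.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from urllib.parse import urlparse


@dataclass
class Backlink:
    source: str
    target: str
    anchor: str
    nofollow: bool
    first_seen: date


def link_profile(links: list[Backlink]) -> dict:
    """Summarize a backlink list into basic link-profile metrics."""
    hosts = {urlparse(link.source).hostname for link in links}
    follow = sum(1 for link in links if not link.nofollow)
    per_month = Counter(link.first_seen.strftime("%Y-%m") for link in links)
    return {
        "links": len(links),
        "referring_hosts": len(hosts),
        "follow_ratio": follow / len(links) if links else 0.0,
        "top_anchors": Counter(link.anchor.strip().lower() for link in links).most_common(3),
        "new_links_per_month": dict(sorted(per_month.items())),
    }


# Hypothetical backlink export.
BACKLINKS = [
    Backlink("https://blog.example.org/post", "https://www.example.com/", "Red Shoes", False, date(2024, 1, 5)),
    Backlink("https://blog.example.org/other", "https://www.example.com/shoes", "red shoes", True, date(2024, 1, 20)),
    Backlink("https://news.example.net/a", "https://www.example.com/", "example", False, date(2024, 2, 3)),
    Backlink("https://forum.example.info/t/1", "https://www.example.com/", "click here", False, date(2024, 2, 9)),
]

if __name__ == "__main__":
    print(link_profile(BACKLINKS))
```

A sudden spike in new links per month, or one commercial anchor dominating the list, are typical signals worth investigating further.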

The ideal link profile should be modeled on those of the best-ranked websites in our sector. To that end, we must analyze our inbound links and plan the strategies needed to bring our profile in line with those of the most powerful websites. After that, we will try to expand and improve on what we already have.

A link profile with many low-quality, spammy, or similar links can compromise our rankings in the future. If we deem it appropriate, we can even clean up bad links, which can be done in basically two ways:

Requesting the source website to remove the link.

Notifying Google, through the disavow links tool in Google Search Console, of the inbound links we do not want taken into account.
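The disavow file is a plain text file with one entry per line: either a full URL or a whole domain written with the `domain:` prefix, with `#` marking comment lines. The sketch below builds such a file from hypothetical lists of domains and URLs. It reflects the format as we understand it from Google’s documentation, so check the current Search Console help before uploading anything.

```python
from typing import Optional


def build_disavow(domains: list[str], urls: list[str], note: Optional[str] = None) -> str:
    """Return disavow-file text: optional comment, then domain: entries, then URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # Normalize and deduplicate domains, then sort for a stable, reviewable file.
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted({u.strip() for u in urls})
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical spam sources found during the audit.
    text = build_disavow(
        ["Spammy-Links.example", "spammy-links.example"],
        ["https://bad.example/page.html"],
        note="Link cleanup after audit",
    )
    print(text, end="")
```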

6 – WPO Analysis

WPO (Web Performance Optimization), or website performance optimization, is increasingly becoming a relevant factor in search rankings. To improve this performance, which is mainly reflected in the website’s loading speed, we must consider aspects such as:

Performance of the chosen server, as well as its configuration

CMS used, template, plugins, etc.

Cleaning and optimizing the database

Clean and optimized code. Here it’s worth highlighting the handling of HTML, CSS, and JS. Depending on the CMS or template, these files are sometimes very heavy and poorly configured, which significantly slows down the website, so their use needs to be analyzed and optimized.

Handling multimedia elements

Reviewing all these elements will be key to achieving good loading speed and website performance. In addition, it’s important to check the status of the Core Web Vitals, the key metrics for loading speed, responsiveness, and visual stability, which we’ll cover in more depth in another article.
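As a quick reference, the sketch below rates field measurements against the published Core Web Vitals thresholds as we understand them: LCP good up to 2.5 s and poor above 4 s, INP good up to 200 ms and poor above 500 ms (INP replaced FID in 2024), and CLS good up to 0.1 and poor above 0.25, each assessed at the 75th percentile. The samples are hypothetical, and the nearest-rank percentile is a simplification of how real field tools aggregate data.

```python
import math

# (good upper bound, needs-improvement upper bound) per metric.
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def p75(samples: list[float]) -> float:
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Classify a metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical field samples for one page template.
    field_data = {
        "LCP": [1800, 2200, 2600, 3100, 4200],
        "INP": [90, 150, 180, 240],
        "CLS": [0.02, 0.05, 0.08, 0.3],
    }
    for metric, samples in field_data.items():
        value = p75(samples)
        print(metric, value, rate(metric, value))
```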

7 – Environmental Analysis

To perform a good audit, it’s very important to put the project we’re working on into context. No two projects are the same, nor are two audits the same, and this is due, in part, to the different sectors and contexts in which they occur. Auditing and developing an SEO strategy for a large e-commerce site in a highly competitive environment will not be the same as auditing and developing an SEO strategy for a corporate website or a less saturated business niche.

7.1 – Sector Analysis

We need to audit the sector in which the website is trying to rank, to understand what kind of game it’s playing. The sector will determine many of the website’s characteristics, and these characteristics will be key when working on SEO: type of content, menus, ways of obtaining links, technical configuration, etc. will be decisive. It’s not just about doing SEO right in a general way, but about adapting it to each context in the most appropriate and efficient way possible.

7.2 – Competitor Analysis

The most competitive sectors will require greater efforts and investment to achieve SEO objectives. This is a key issue in an audit. We must be aware that positioning is dynamic, influenced by the actions of a large number of players at any given time.

If my competitors in the SERPs are strong, I need to be strong too. A link building or content strategy is useless if we don’t take this into account. Besides giving us an idea of the effort required, understanding our competition will help us:

Discover content strategies that are working

See where they get their links, to assess which ones we can replicate

Understand which types of SERP results appear for the core searches that interest us: standard links, images, local results, featured snippets…

Types of architecture used, highlighted areas, and information processing of the best-positioned websites.
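The link-related point above is often approached as a "link gap" analysis: domains that link to several competitors but not to us are good candidates to study first. A minimal sketch, assuming hypothetical lists of referring domains exported for our site and for each competitor:

```python
from collections import Counter


def link_gap(ours: list[str], competitors: dict[str, list[str]],
             min_competitors: int = 2) -> list[str]:
    """Domains linking to at least `min_competitors` competitors but not to us."""
    counts = Counter(d for domains in competitors.values() for d in set(domains))
    our_domains = set(ours)
    return sorted(d for d, c in counts.items()
                  if c >= min_competitors and d not in our_domains)


# Hypothetical referring domains per site.
OURS = ["a.example"]
COMPETITORS = {
    "competitor-1": ["a.example", "b.example", "x.example"],
    "competitor-2": ["b.example", "x.example", "y.example"],
    "competitor-3": ["b.example"],
}

if __name__ == "__main__":
    print(link_gap(OURS, COMPETITORS))
```

Raising `min_competitors` narrows the list to the domains that are most established in the sector.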

And so on, until we’ve covered almost every aspect we consider relevant to study about our competition. I’ve left this point for last, as it’s not a “proper” part of the website audit, but perhaps it belongs at the beginning, since all the analyses and actions derived from the audit will be influenced by the environment in which the website project is developed.