We know that there are different areas or disciplines to consider in SEO. One of them is Technical SEO. We could understand it as the necessary fine-tuning of a website before carrying out traffic growth actions. It’s the necessary complement to Content SEO and Link Building. Below, we’ll break down all the aspects of these techniques and how to work on Technical SEO.

1 – Technical SEO as a Starting Point

All areas of SEO are necessary to rank a website, but we can say that there is a certain hierarchy among them. This hierarchy is not about importance; it is about which tasks must be completed before others can be tackled.

In this sense, before scaling up our SEO work, we must have the machinery fine-tuned so that all the effort and resources invested in, for example, content and Link Building are put to full use and yield the best results.

Well, we can call this fine-tuning Technical SEO. Let’s say that all the actions in this branch of SEO are oriented toward two specific objectives:

Facilitate web crawling and indexing by search engines.

Generate the best possible user experience.

To achieve these objectives, it is important to pay attention to the following points:

2 – Server

The server is responsible for serving pages to both users and bots. A malfunction can make URLs hard to access, which hurts rankings and can even lead to pages being deindexed.

At this point, remember that all 5XX errors are server-related. But it’s not just errors that can affect rankings. A long wait time or problems rendering pages (especially JavaScript) can cause crawlers to access partially loaded versions.

For the server to be optimized and offer better ranking possibilities, its characteristics must be appropriate. In this sense, it is necessary to address issues such as:

The type of disk used. There are two options: HDD and SSD. For SEO, SSD drives are recommended, although they are more expensive.

Disk capacity. It’s good to have sufficient storage capacity, since the fuller the disk, the slower it is to access and retrieve data.

Memory (RAM). It’s essential to have enough memory to serve our site’s pages.

Traffic capacity. It’s important to ensure we’re not affected by any traffic restrictions, so we must have sufficient contracted capacity.

This website is hosted on Webempresa servers.

3 – Domain Certificates

Having a secure certificate on your domain is very important both from a user perspective and when it comes to ranking in search engines.

Most hosting providers include these certificates for free.

After installing the certificate, your browser may still warn that the page contains insecure elements. This happens because the code may still load some resources (images, scripts, stylesheets) over plain http, a problem known as mixed content. In WordPress, the Really Simple SSL plugin can solve this.
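Before reaching for a plugin, it can help to see which references trigger the warning. The sketch below assumes the page HTML is already available as a string and uses Python’s standard `html.parser` to list subresources (`src` attributes and `<link href>`) still requested over `http://`. It is a simplified check: it ignores `srcset`, CSS `url()` references, and URLs built by JavaScript.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Collects subresource URLs that are still requested over plain http."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value is None:
                continue
            # src on <img>, <script>, <iframe>, etc.; href only on <link> (stylesheets, icons).
            # A plain <a href="http://..."> is a navigation link, not mixed content.
            is_resource = name == "src" or (tag == "link" and name == "href")
            if is_resource and value.strip().lower().startswith("http://"):
                self.insecure.append(value)


def find_mixed_content(html: str) -> list[str]:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


page = """
<link rel="stylesheet" href="http://www.example.com/style.css">
<img src="https://www.example.com/logo.png" alt="Logo">
<script src="http://cdn.example.com/app.js"></script>
<a href="http://other.example.org/">External link</a>
"""
print(find_mixed_content(page))
# ['http://www.example.com/style.css', 'http://cdn.example.com/app.js']
```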

4 – Source Code

Cleaning and optimizing a website’s source code is very important when working on technical SEO. To do this, we can consider the following:

CMS and template used. It’s important to make sure the elements we choose are optimized.

Minify HTML, CSS, and JS files. For this, there are several WordPress plugins, such as Rank Math and Autoptimize.
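To illustrate what minification does, here is a deliberately naive CSS minifier built with Python’s `re` module. It only removes comments and redundant whitespace; it is a sketch, not a replacement for tools like Autoptimize, and it would break CSS whose strings or `url()` values contain `/*`, `;` or meaningful spaces.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: removes comments and unnecessary whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse runs of whitespace
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)           # trim spaces around punctuation
    css = re.sub(r":\s+", ":", css)                        # "color: red" -> "color:red"
    css = css.replace(";}", "}")                           # last semicolon in a block is optional
    return css.strip()


source = """
/* Main styles */
a { color: red; }
h1 , h2 { margin: 0 ; }
"""
print(minify_css(source))  # a{color:red}h1,h2{margin:0}
```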

5 – Databases

Another important element we must keep optimized is our website’s databases. It’s very common for these records to end up containing a lot of information we no longer need, and for other types of information to not be stored in the most efficient way.

Therefore, it’s essential to keep the database under control and make sure that queries and modifications are performed as efficiently as possible.

For this task, we also have WordPress plugins that can help us, such as WP-Sweep, which performs tasks such as cleaning up revisions, unapproved comments, page metas, or orphaned posts, optimizing tables, etc. It’s definitely very useful.
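To make two of these cleanup tasks concrete, the sketch below deletes post revisions and orphaned metadata from an in-memory SQLite database. The tables are a heavily simplified, hypothetical version of WordPress’s `wp_posts` and `wp_postmeta` (a real site runs on MySQL or MariaDB with many more columns), so treat it as an illustration of the idea and back up the database before running anything similar on a live site.

```python
import sqlite3


def create_demo_db() -> sqlite3.Connection:
    """In-memory database with simplified, hypothetical WordPress-like tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE wp_posts (ID INTEGER PRIMARY KEY, post_type TEXT, post_title TEXT);
        CREATE TABLE wp_postmeta (meta_id INTEGER PRIMARY KEY, post_id INTEGER, meta_key TEXT);
        INSERT INTO wp_posts VALUES
            (1, 'post', 'Technical SEO'),
            (2, 'revision', 'Technical SEO (draft 1)'),
            (3, 'revision', 'Technical SEO (draft 2)');
        INSERT INTO wp_postmeta VALUES
            (1, 1, '_thumbnail_id'),
            (2, 99, '_edit_lock');  -- post 99 no longer exists
    """)
    return conn


def sweep(conn: sqlite3.Connection) -> tuple[int, int]:
    """Delete revisions, then orphaned metadata; return how many rows of each were removed."""
    cur = conn.cursor()
    cur.execute("DELETE FROM wp_posts WHERE post_type = 'revision'")
    revisions = cur.rowcount
    cur.execute("DELETE FROM wp_postmeta WHERE post_id NOT IN (SELECT ID FROM wp_posts)")
    orphans = cur.rowcount
    conn.commit()
    conn.execute("VACUUM")  # rebuild the database to reclaim free space
    return revisions, orphans


conn = create_demo_db()
print(sweep(conn))  # (2, 1)
```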

6 – Crawl Optimization

When optimizing a website’s technical SEO, it’s important to configure and optimize how search engines crawl your website. To do this, it’s essential to consider the following aspects:

6.1 – Server Response Codes

First, we need to understand the responses crawlers receive from servers when they attempt to access resources to crawl the website. Analyzing these response codes will be important because server access is critical for SEO. The types of response codes we may encounter are:

2XX Codes: Request resolved successfully

3XX Codes: Redirects

4XX Codes: Resource inaccessible because it does not exist or is prohibited

5XX Codes: Server failure

To analyze these response codes, a good option is to use a crawler like Screaming Frog. Based on the response codes obtained, we will have to work to resolve any potential issues.
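Once a crawl is finished, grouping the results by status class is a quick way to decide where to start. The sketch below assumes the crawl has already been exported as hypothetical (URL, status code) pairs; it does not reproduce any particular tool’s export format.

```python
from collections import Counter

STATUS_CLASSES = {
    2: "2XX success",
    3: "3XX redirect",
    4: "4XX client error",
    5: "5XX server error",
}


def classify(status: int) -> str:
    """Map an HTTP status code to its class using the first digit."""
    return STATUS_CLASSES.get(status // 100, "other")


def summarize(crawl: list[tuple[str, int]]) -> Counter:
    return Counter(classify(status) for _, status in crawl)


def to_review(crawl: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Every response that is not 2XX deserves a look."""
    return [(url, status) for url, status in crawl if classify(status) != STATUS_CLASSES[2]]


crawl = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/blog/", 200),
    ("https://www.example.com/old-post/", 301),
    ("https://www.example.com/missing/", 404),
    ("https://www.example.com/search/", 503),
]
print(summarize(crawl))
print(to_review(crawl))
```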

6.2 – File Types

Another important aspect when analyzing crawling is knowing the type of files the crawler will encounter as it navigates the website. At this point, we’ll pay special attention to the most common file types these crawlers access: HTML, CSS, JS, and images.

Regarding the handling of these files, we must ensure the following:

Their access is enabled (recommended in most cases).

In the case of HTML, CSS, and JS, they must be minified or optimized in some way.

In the case of images, they must be well-labeled and optimized in terms of size and weight.

6.2 – Optimizing Our Crawl Budget

A key concept when working on crawling is the Crawl Budget. It refers to the amount of resources (time) a search engine devotes to crawling our website. This budget varies and usually depends on factors such as the age or authority of the domain and the type of website.

Given this limitation, we need to tell crawlers which URLs or areas of the website have crawling priority, since those are the ones we want indexed and ranked first.

We give these instructions through two files: sitemap.xml and robots.txt.

6.3 – Sitemap.xml

As its name suggests, this involves creating a file containing a map of our website’s structure. It should give preference to the URLs we consider relevant and whose indexing and ranking are a priority.

Although there are WordPress plugins that automatically generate these types of files based on the settings we specify (such as Rank Math and Yoast SEO), it’s very important to perform manual monitoring to ensure these files contain the exact information we want.
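When checking what a plugin generates, it helps to know what a minimal sitemap looks like. This sketch builds one with Python’s standard `xml.etree.ElementTree`, following the sitemaps.org protocol (`urlset`, `url`, `loc`, `lastmod`); the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """entries: (absolute URL, last modification date as YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as &
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/technical-seo/", "2024-02-01"),
]))
```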

6.4 – Robots.txt

If the Sitemap.xml file helps search engines crawl our website, the Robots.txt file will indicate the following:

Which crawlers (User Agent) can access it, and which ones cannot.

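Which URLs, or sets of URLs, are allowed (Allow) and which are not (Disallow). You can specify individual URLs or patterns, but note that robots.txt does not support full regular expressions: only the * (any sequence of characters) and $ (end of the URL) wildcards, which are part of the Robots Exclusion Protocol standard and are supported by Google.

Sitemap.xml path. Because robots.txt is the first file crawlers look for, it’s a good idea to use it to tell them where to find the sitemap.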

The configuration and everything that can be included in this file, as well as its scope, warrant a much more in-depth study. With the above information, we can already get an idea of the potential and importance of Robots.txt for SEO.
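A quick way to check how a robots.txt file is interpreted is Python’s standard `urllib.robotparser` (`site_maps()` needs Python 3.8 or later). The file below is a hypothetical example. One caveat: this parser applies rules in the order they appear and does not understand the `*` and `$` wildcards, whereas Google applies the most specific matching rule, so the `Allow` line is placed first to get the same result from both.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/

User-agent: BadBot
Disallow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://www.example.com/blog/"),
    ("Googlebot", "https://www.example.com/wp-admin/options.php"),
    ("Googlebot", "https://www.example.com/wp-admin/admin-ajax.php"),
    ("BadBot", "https://www.example.com/blog/"),
]
for agent, url in checks:
    print(f"{agent:10} {url:50} allowed={parser.can_fetch(agent, url)}")

print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```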

6.5 – Breadcrumbs

Breadcrumb menus are elements that indicate, through links, the current URL path. They are important elements from the following points of view:

User Experience.

Page Rank distribution.

Ease of crawling for search engines.
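As a sketch of how a breadcrumb trail maps onto the URL path, the functions below derive one from a URL and render it as links. They assume a hierarchical URL structure with trailing slashes and build labels from the slugs, which is a hypothetical simplification: real sites usually take the labels from page or category titles.

```python
from html import escape
from urllib.parse import urlsplit


def breadcrumb_trail(url: str) -> list[tuple[str, str]]:
    """(label, url) pairs from the home page down to `url`.

    Hypothetical labeling rule: the label is the slug with hyphens turned into spaces.
    """
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}/"
    trail = [("Home", base)]
    path = ""
    for segment in (s for s in parts.path.split("/") if s):
        path += segment + "/"
        trail.append((segment.replace("-", " ").capitalize(), base + path))
    return trail


def breadcrumb_html(trail: list[tuple[str, str]]) -> str:
    """Link every level except the last one, which is the current page."""
    items = [f'<a href="{escape(href)}">{escape(label)}</a>' for label, href in trail[:-1]]
    items.append(f"<span>{escape(trail[-1][0])}</span>")
    return '<nav aria-label="Breadcrumb">' + " &gt; ".join(items) + "</nav>"


trail = breadcrumb_trail("https://www.example.com/blog/technical-seo/")
print(trail)
print(breadcrumb_html(trail))
```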

6.6 – Pagination

Pagination is very common on web pages. It’s used for blog posts, products, and any type of content that contains multiple elements.

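For search engines to crawl the URLs that make up a paginated series, the pagination must be marked up correctly with the appropriate tags (rel="next" href… and rel="prev" href…). Keep in mind that Google announced in 2019 that it no longer uses these tags as an indexing signal, although they remain valid HTML and other search engines may still read them.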

This way, we’ll tell the crawlers that they’re facing a paginated page and which URL to visit next.
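The sketch below generates those tags for a given page of a series. The URL pattern (page 1 at the base URL, later pages at `page/2/`, `page/3/`, and so on) is a hypothetical scheme modeled on WordPress archives; adapt `page_url` to your own site.

```python
def pagination_links(base_url: str, page: int, total_pages: int) -> list[str]:
    """rel="prev"/rel="next" tags for page `page` (1-based) of a paginated series."""

    def page_url(n: int) -> str:
        # Hypothetical WordPress-style scheme: /blog/, /blog/page/2/, /blog/page/3/...
        return base_url if n == 1 else f"{base_url}page/{n}/"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < total_pages:
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags


for page in (1, 2, 3):
    print(page, pagination_links("https://www.example.com/blog/", page, 3))
```

The first page only gets a `next` tag and the last page only a `prev` tag, so the series has a clear start and end.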

6.7 – Web Architecture

When it comes to facilitating website crawling, it’s very important that the structure facilitates crawlers’ movement between the different URLs and different sections of the website. For this, we need a clean, optimized, and well-linked structure.

6.8 – Orphan Pages

For search engines to properly crawl your entire website, it’s very important that every URL receives at least one link from another page. The more important the URL, the more internal links should point to it.
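Orphan pages can be detected by comparing two lists: every URL that should exist (for example, taken from the sitemap or the CMS) and every URL that receives at least one internal link during a crawl. The sketch assumes both are already available as Python data structures; the URLs are placeholders.

```python
def find_orphans(known_urls: set[str], links: dict[str, list[str]], home: str) -> set[str]:
    """Return known URLs that no other page links to.

    `links` maps each crawled page to the internal URLs it links to.
    The home page is excluded because it is the crawl's entry point.
    """
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return known_urls - linked - {home}


known = {"/", "/blog/", "/blog/technical-seo/", "/old-landing/"}
links = {
    "/": ["/blog/"],
    "/blog/": ["/", "/blog/technical-seo/"],
    "/blog/technical-seo/": ["/blog/", "/blog/technical-seo/"],
}
print(find_orphans(known, links, "/"))  # {'/old-landing/'}
```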

6.9 – Page Depth

There is one important aspect when organizing the architecture and crawling of a website: the depth at which a given URL is located. Depth is the number of clicks needed to reach that URL from the home page. Besides making the URL harder to reach, greater depth means the URL is more affected by the so-called Damping Factor.

The Damping Factor comes from the original PageRank formula, where it is usually set to 0.85. In SEO it is commonly simplified as a correction to a URL’s PageRank based on its depth: it is estimated that about 15% of PageRank is lost for each level away from the home page.
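Click depth can be computed with a breadth-first search over the internal link graph, starting from the home page. The sketch below does that and then applies the simplified 15%-per-level rule (multiplying by 0.85 for each level). That rule is a rough SEO heuristic rather than how PageRank is actually calculated, and the link graph is a hypothetical example.

```python
from collections import deque

DAMPING = 0.85  # usual PageRank damping value: roughly 15% "lost" per hop


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Minimum number of clicks from `home` to every reachable URL (breadth-first search)."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def retained_share(depth: int) -> float:
    """Simplified heuristic: share of PageRank left after `depth` levels."""
    return DAMPING ** depth


links = {
    "/": ["/blog/", "/contact/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/old-post/"],
}
for url, d in click_depths(links, "/").items():
    print(f"{url:18} depth={d} retained~{retained_share(d):.0%}")
```

Pages that only appear deep inside paginated series, like `/blog/old-post/` here, are typical candidates for extra internal links.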

6.10 – Server Logs

It’s important to keep track of what happens when crawlers visit and navigate our website, and server log analysis is very useful for this. With tools like Screaming Frog Log File Analyser, we can review the entries that Googlebot, for example, leaves in our server logs when it visits the site. This tells us whether the crawling preferences we have configured are actually working.
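Dedicated tools do this at scale, but the core idea fits in a few lines. The sketch below assumes logs in the common Apache/Nginx "combined" format and counts Googlebot requests by status code and by path; the sample lines are made up. It matches on the user-agent string only, and since that string can be spoofed, Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# One line of the Apache/Nginx "combined" log format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_activity(lines):
    """Count requests whose user agent claims to be Googlebot, by status and by path."""
    statuses, paths = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None or "Googlebot" not in match["agent"]:
            continue
        statuses[match["status"]] += 1
        paths[match["path"]] += 1
    return statuses, paths


sample = [
    '66.249.66.1 - - [10/Mar/2024:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Mar/2024:10:00:05 +0000] "GET /old-page/ HTTP/1.1" 404 310 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Mar/2024:10:01:00 +0000] "GET /blog/ HTTP/1.1" 200 5123 '
    '"https://www.example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
statuses, paths = googlebot_activity(sample)
print(statuses)  # Counter({'200': 1, '404': 1})
print(paths)
```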

7 – Indexing Optimization

When it comes to telling search engines what preferences we have regarding how we want pages to be indexed, we have several options.

7.1 – Bot access to pages

For search engines to index our URLs, we must first allow them to access them. As indicated in previous sections, we will do this by properly configuring the Robots.txt file and its Allow and Disallow directives.

7.2 – Indexing Directives

There are two types of instructions we can give crawlers about how to treat a specific page and its elements: directives and suggestions. As the names suggest, search engines are expected to obey the former, while the latter are hints for them to consider.

Among indexing directives, the main one is the meta robots tag. It has two basic parameters, each of which can take two values:

Indexing parameter: tells crawlers whether or not the page should be indexed. Its possible values are index / noindex.

Follow parameter: tells crawlers whether they should follow and crawl the links found on that page. Its possible values are follow / nofollow.
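In HTML, both parameters go in a single tag, for example `<meta name="robots" content="noindex, follow">`. The sketch below reads that tag with Python’s standard `html.parser` and reports the resulting status, treating a missing tag as index, follow (the default behavior). It ignores the `X-Robots-Tag` HTTP header and crawler-specific tags such as `name="googlebot"`.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects the values of every <meta name="robots"> tag on the page."""

    def __init__(self) -> None:
        super().__init__()
        self.values: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            for value in (attributes.get("content") or "").split(","):
                if value.strip():
                    self.values.add(value.strip().lower())


def robots_status(html: str) -> dict[str, bool]:
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    # Without a contrary value, pages are indexed and their links followed.
    return {
        "index": "noindex" not in parser.values,
        "follow": "nofollow" not in parser.values,
    }


print(robots_status('<head><meta name="robots" content="noindex, follow"></head>'))
# {'index': False, 'follow': True}
print(robots_status("<head><title>No robots tag</title></head>"))
# {'index': True, 'follow': True}
```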

7.3 – Indexing Suggestions

As we’ve already mentioned, other types of hints give crawlers information about how to interpret certain aspects of the website. Ultimately, search engines decide how to use that information.

These indexing suggestions are as follows:

Canonical: a tag placed on a URL that indicates the main (preferred) URL for the content it contains. An example makes this clearer: if we have two URLs, such as two product listings, with very similar content, adding the <link rel="canonical" href="https://www.paginabuena.com/" /> tag tells search engines that all consideration should go to that URL, which is the canonical one.

Paginations: As we have indicated in previous sections, it is necessary, in the case of paginations, to indicate to the crawlers which are the previous and next URLs of the paginated series. This is done with the next and prev tags.
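To audit canonical tags across a site, we need to read the tag from each page and compare it with the page’s own URL. The sketch below does this with Python’s `html.parser`. Treating a page without a canonical tag as its own canonical is an assumption made for simplicity; search engines may still choose a different canonical on their own.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalParser(HTMLParser):
    """Stores the href of the first <link rel="canonical"> found."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attributes.get("href")


def canonical_of(page_url: str, html: str) -> str:
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    # Assumption: no tag means the page is treated as its own canonical.
    return parser.canonical or page_url


listing = '<head><link rel="canonical" href="https://www.paginabuena.com/" /></head>'
print(canonical_of("https://www.paginabuena.com/?sort=price", listing))
# https://www.paginabuena.com/
```

Pages whose canonical differs from their own URL are the ones to double-check: the target should exist, return a 200, and hold the content we actually want to rank.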

7.4 – Duplicate Content

To facilitate search engines’ understanding of our website, and therefore its indexing, it is very important that it does not contain duplicate content. Furthermore, this could lead to keyword cannibalization.

It is important to consider merging pages with similar content or using indexing directives and suggestions to optimize this process.
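One common way to spot pages with very similar content, shown here as an illustration rather than as what search engines actually do, is to compare sets of overlapping word sequences ("shingles") with the Jaccard similarity. Pairs scoring close to 1.0 are candidates for merging or for a canonical tag; any cut-off you choose (for example 0.8) is a judgment call.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping `size`-word sequences in the text (case-insensitive)."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of the two shingle sets: 1.0 identical, 0.0 nothing shared."""
    sa, sb = shingles(a, size), shingles(b, size)
    union = sa | sb
    if not union:  # both texts are too short to compare
        return 0.0
    return len(sa & sb) / len(union)


print(similarity("Red running shoes for men", "Red running shoes for women"))  # 0.5
```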

7.5 – Auditing Redirects

Redirects consume resources and can cause many problems, especially when there are redirect loops (infinite redirects) or very long redirect chains. These cases can cause indexing problems and waste crawl budget.
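Given the redirects found during a crawl as a hypothetical source-to-target map, the sketch below follows each one to the end and labels it: `ok` for a direct redirect, `chain` when it takes more than one hop, and `loop` when it comes back to a URL already visited. Chains are usually fixed by pointing the first URL straight at the final destination.

```python
def audit_redirect(start: str, redirects: dict[str, str]) -> tuple[list[str], str]:
    """Follow `redirects` (source -> target) from `start` and classify the result."""
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:  # already visited: infinite redirect
            return chain + [target], "loop"
        chain.append(target)
    hops = len(chain) - 1
    return chain, "chain" if hops > 1 else "ok"


redirects = {
    "/old/": "/new/",
    "/a/": "/b/",
    "/b/": "/c/",
    "/x/": "/y/",
    "/y/": "/x/",
}
for url in ["/old/", "/a/", "/x/"]:
    print(audit_redirect(url, redirects))
# (['/old/', '/new/'], 'ok')
# (['/a/', '/b/', '/c/'], 'chain')
# (['/x/', '/y/', '/x/'], 'loop')
```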

8 – WPO and Rendering

Web performance optimization is an increasingly important factor when it comes to website ranking. In this sense, there are several aspects to consider to achieve optimal website performance. We discussed some of these aspects that influence WPO at the beginning of this article, such as the selection of the server and its features, and the optimization of code and databases.

8.1 – Loading Time

Loading time is essential for good SEO rankings. First, it is closely related to user experience, which search engines take into account.

Furthermore, optimizing loading time makes crawlers’ jobs easier. A good loading time means less crawl budget is consumed on the first load of the website and also ensures that the crawler doesn’t start scanning a partially loaded website.

8.2 – Page Size

The size of the page that browsers and crawlers have to download is also an important factor affecting WPO. The larger the page, the slower and harder it is to load and process the URL, which is why we should try to minimize the size of our website’s pages.

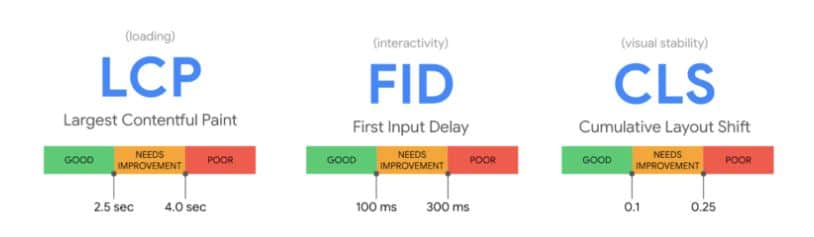
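A simple way to decide where to start shrinking a page is to break its weight down by resource type. The sketch below assumes we already have a hypothetical list of (type, bytes) pairs, for example copied from the browser’s developer tools, and sorts the totals so the heaviest category comes first.

```python
from collections import Counter


def weight_by_type(resources: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Total bytes per resource type, heaviest first."""
    totals = Counter()
    for kind, size in resources:
        totals[kind] += size
    return totals.most_common()


resources = [
    ("html", 45_000),
    ("css", 80_000),
    ("js", 350_000),
    ("image", 900_000),
    ("image", 250_000),
    ("font", 60_000),
]
breakdown = weight_by_type(resources)
total = sum(size for _, size in breakdown)
for kind, size in breakdown:
    print(f"{kind:6} {size / 1000:8.1f} kB  {size / total:5.1%}")
print(f"total  {total / 1000:8.1f} kB")
```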

8.3 – Core Web Vitals

Within the set of factors related to WPO, there is a group that is gaining increasing importance, called Core Web Vitals. These metrics measure how quickly a page loads, how quickly it responds to the user, and how visually stable it is, that is, how soon and how smoothly a user can start interacting with the requested URL.

These factors are the following:

LCP: Largest Contentful Paint. Indicates the time it takes for the largest element in the viewport to render. This metric is intended to reflect a user’s perception of speed when loading a website.

FID: First Input Delay. This metric measures the time from a user’s first interaction with the page (a click or a tap, for example) until the browser is able to start processing it. The lower the FID, the better the experience. Note that in March 2024 Google replaced FID with INP (Interaction to Next Paint) as a Core Web Vital.

CLS: Cumulative Layout Shift. It measures how much the visible content moves unexpectedly while the page loads. It is a unitless score rather than a time: the lower it is, the more visually stable the page.

The values Google considers “good” for Core Web Vitals are an LCP of 2.5 seconds or less, an FID of 100 milliseconds or less, and a CLS of 0.1 or less.
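The sketch below rates measured values against Google’s published “good” and “poor” thresholds for LCP, FID and CLS, stored in the `THRESHOLDS` table. Google applies these thresholds to the 75th percentile of real user page loads, so the input should ideally be that percentile rather than a single lab measurement; the measured values here are made up.

```python
# Google's published thresholds: (upper limit for "good", upper limit for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "FID": (100, 300),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless score
}


def rate(metric: str, value: float) -> str:
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


measured = {"LCP": 2.1, "FID": 180, "CLS": 0.31}
for metric, value in measured.items():
    print(metric, value, rate(metric, value))
```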

9 – Ranking Elements

Once our website is crawlable and indexable, it is necessary for search engines to consider it appropriate and interesting for indexing. This can be achieved by sending relevance signals to URLs. To send these signals, we have several options, such as the following:

9.1 – Internal and External Links

Links are the foundation of the internet, and the signals sent through them are highly valued by crawlers. If we establish internal links that provide the website with a good architecture and help highlight the most prominent URLs or areas, we will be helping its indexing by search engines.

On the other hand, if we send authority signals through external links, we will also be indicating to search engines the importance of a given URL, thus increasing its indexing chances. For external links to have the desired effect on URL indexing, it is very important that these links be of a certain quality and placed on websites or URLs with a certain authority. Failure to do so may hinder indexing, or even jeopardize it, if search engines consider the links spam.
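To see how internal links concentrate authority, here is a sketch of the classic PageRank calculation (power iteration with the usual damping factor of 0.85) over a hypothetical internal link graph. It only illustrates the original formula; search engines’ current ranking systems use many more signals, and external links are not modeled here.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration over an internal link graph."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1 / n for page in pages}
    for _ in range(iterations):
        # Pages without outgoing links share their rank evenly with every page.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for source, targets in links.items():
            for target in targets:
                new_rank[target] += damping * rank[source] / len(targets)
        rank = new_rank
    return rank


links = {
    "home": ["blog", "contact"],
    "blog": ["home", "post"],
    "post": ["home"],
    "contact": ["home"],
}
for page, score in sorted(pagerank(links).items(), key=lambda item: -item[1]):
    print(f"{page:8} {score:.3f}")
```

The page that every other page links back to (`home` here) ends up with the highest score, which is the effect a deliberate internal linking structure aims for.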

9.2 – Content Groups

Another way to help our URLs get indexed is to organize them into groups of pages with a specific semantic relationship, known as Clusters. Because these clusters contain related URLs that link to each other, they gain authority as resources on a specific topic.

Examples of clusters include the categories of a blog or online store, or the verticals of a monetization website.

10 – Clickability

To achieve visibility and therefore clickability, it is very important that our URLs appear prominently on search pages. This means appearing in the top positions of Google’s search results pages, the SERP. But not only that. Today, SERPs contain results in different formats to meet users’ search intent.

Therefore, we must adapt the format of our content to be likely to appear according to the user’s search intent (images, local results, products, hotel reservations, etc.).

Furthermore, when creating our content, we must keep in mind that it is possible to reach position 0, or “featured snippet.”

For all of this, the format we give our content is very important.

10.1 – Structured Data

One way to format our content is through structured data, which can make a page eligible for rich snippets. Through code, we tell search engines what type of content they will find at a specific URL. This way, they can better understand it and present it in the SERPs in a structured format that is easier to understand for a user with a specific search intent.
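Structured data is commonly added as a JSON-LD script using the schema.org vocabulary. The sketch below builds one for an article; the `Article` type and its `headline`, `author`, `datePublished` and `mainEntityOfPage` properties come from schema.org, while the values are placeholders. The properties a search engine requires before showing a rich result vary by content type, so check its documentation before relying on this.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> str:
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value can never close the <script> element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_jsonld(
    "Technical SEO: a practical guide",
    "Jane Doe",
    "2024-03-01",
    "https://www.example.com/technical-seo/",
))
```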

10.2 – Featured Snippets

As we mentioned previously, it’s important to design our content with SERP position 0 in mind. To do this, for searches likely to have informational intent, we must prepare the content so it can be displayed in position 0, taking into account the short-answer or list format that results in this position usually have.

Note that to be a candidate for appearing in this position 0, it is necessary to first be among the first results in the SERP for that search.
